Data Provenance Explained: How to Prove Your AI Decisions Are Trustworthy

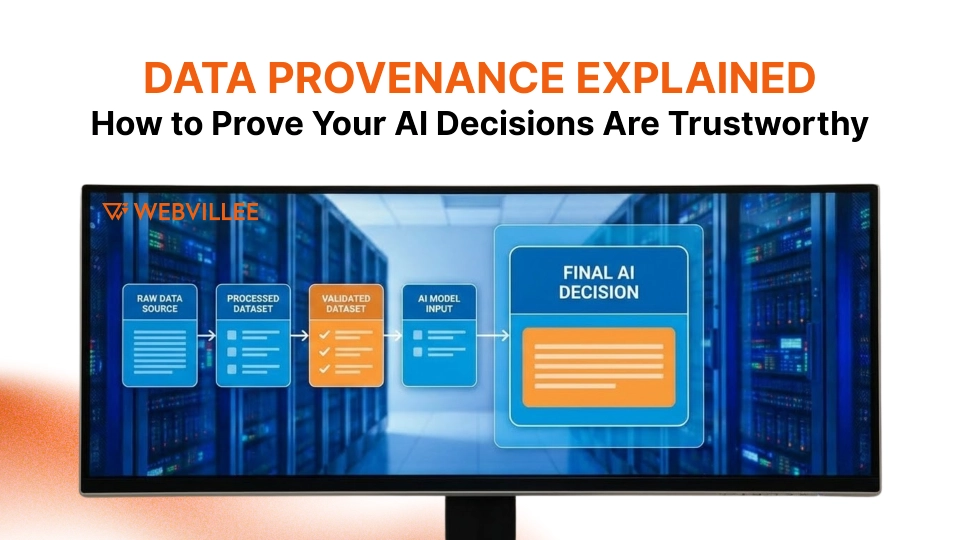

AI data provenance is the comprehensive documentation of where data came from, how it changed through processing, and who handled it across the entire AI lifecycle, enabling organizations to prove their AI decisions are trustworthy and defensible.

Why Trust in AI Decisions Is Under Scrutiny

AI data provenance has become critical because the growing demand for explainable and defensible AI outcomes means saying “the model said so” is no longer acceptable to regulators, customers, or business leaders.

Organizations deploying AI face uncomfortable questions. When a loan application gets denied, customers want to know why. Also when hiring algorithms reject candidates, regulators demand explanations. When fraud detection systems flag transactions, businesses need proof the decision was justified.

The problem is most AI systems operate as black boxes. Data flows in, decisions come out, but the path between input and output remains invisible. This opacity creates risk.

Trust has become a prerequisite for AI adoption. Without it, businesses cannot deploy AI for high stakes decisions. Customers refuse to accept automated outcomes they cannot verify. Regulators block systems that cannot demonstrate fairness and accountability.

What Is Data Provenance and Why It Matters for AI Systems

Data provenance is the complete history of a dataset including its origin, every transformation it underwent, who owned or accessed it, and how it was used throughout the AI system lifecycle.

Think of provenance as a detailed receipt that tracks data from source to final decision. This receipt documents where data started, what happened to it along the way, and who was responsible at each step.

How Provenance Differs From Data Lineage and Metadata

Data lineage tracks the flow of data through systems. It shows what data moved where and when.

Metadata describes data characteristics like format, size, and creation date. It labels what data is but does not explain its journey.

Provenance combines both and adds accountability. It tracks the full story, including transformations, decision points, and human actions that shaped the data. This makes provenance foundational to trustworthy AI systems because it enables reconstruction of exactly how decisions were made.

How AI Decisions Become Questionable Without Provenance

Without AI data provenance, organizations face training data ambiguity, unverifiable sources, and hidden bias that make AI decisions impossible to defend when questioned by stakeholders or regulators.

Training data ambiguity happens when teams cannot identify which specific data influenced model behavior. Did the training set include biased historical decisions? Were data samples representative? Nobody knows because the record does not exist.

Unverifiable Data Sources and Silent Data Drift

AI models trained on data from unknown or poorly documented sources create liability. When data quality degrades over time through silent drift, models make increasingly poor decisions without anyone noticing until damage occurs.

The risks of making high impact decisions without traceability are severe. Organizations cannot:

- Defend decisions during audits or legal challenges

- Identify root causes when AI systems produce harmful outcomes

- Demonstrate compliance with fairness and anti discrimination laws

- Fix problems because they cannot trace issues back to specific data

- Build stakeholder confidence in automated decision making

The Role of Data Provenance Across the AI Lifecycle

AI data provenance must be captured continuously from initial data collection through training and into real time inference to maintain an unbroken chain of custody for every data point that influences decisions.

Provenance in data collection and ingestion establishes the foundation. Teams document where raw data originated, who provided it, under what terms it can be used, and what quality checks were applied during acquisition.

Tracking Transformations During Training and Fine Tuning

During model development, data undergoes numerous transformations. It gets cleaned, normalized, augmented, and balanced. Each change must be recorded with details about what transformation occurred, why it was necessary, and who approved it.

Training processes create additional provenance requirements. Which data subsets trained which model versions? What hyperparameters were used? How did the model change between iterations? This history becomes essential for understanding model behavior.

Maintaining Provenance During Inference and Real Time Decisions

When deployed AI makes live decisions, provenance tracking continues. Systems must log which data the model analyzed, what version of the model made the decision, and what external factors influenced the outcome.

This real time provenance enables organizations to reconstruct specific decisions weeks or months later when questions arise about why the AI acted in a particular way.

How Data Provenance Helps Prove AI Decisions Are Trustworthy

Provenance proves trustworthiness by enabling complete reconstruction of how specific AI decisions were made, demonstrating consistent behavior over time, and supporting thorough audits by regulators and stakeholders.

Reconstructing how a specific decision was made becomes straightforward with strong provenance. Investigators can trace backward from the decision through model inference, training data, and original data sources to verify every step was appropriate.

Demonstrating Consistency and Reliability Over Time

Provenance allows comparison of decisions across time periods. Organizations can prove their AI behaves consistently and does not drift toward unfair or biased outcomes as conditions change.

This consistency evidence becomes crucial during regulatory reviews where officials need confidence that AI systems produce reliable results not just during testing but throughout their operational lifetime.

Supporting Audits, Investigations, and Stakeholder Reviews

When auditors investigate AI decisions, provenance provides the documentation they need without disrupting operations. Every question about data sources, processing steps, or decision logic can be answered with verifiable records.

Stakeholder reviews benefit similarly. Customers disputing automated decisions receive clear explanations. Internal teams investigating model failures quickly identify root causes. Executives gain confidence to expand AI deployment based on proven accountability.

Data Provenance and Regulatory Expectations

Regulators care about data origin and handling because AI data provenance enables them to verify organizations meet fairness, transparency, and accountability requirements without banning beneficial AI applications.

How provenance supports compliance with emerging AI regulations is straightforward. Laws like the EU AI Act and various national frameworks require organizations to document training data sources, demonstrate bias testing, and maintain audit trails.

Provenance frameworks satisfy these requirements by design. They capture exactly what regulators need to verify responsible AI development and deployment.

Preparing for Audits Without Slowing Innovation

Strong provenance lets organizations pass audits quickly because documentation already exists. Teams avoid scrambling to reconstruct historical decisions or explain data choices made months earlier.

This efficiency enables innovation to continue. Engineers work confidently knowing their processes generate compliant records automatically rather than treating compliance as a separate burden that slows development.

Key Components of an Effective Data Provenance Framework

Effective AI data provenance frameworks require source validation to confirm data authenticity, immutable records that cannot be altered, and clear ownership accountability for every data asset throughout its lifecycle.

Source validation and data authenticity checks establish trust at the beginning. Systems verify data comes from legitimate sources, has not been tampered with, and meets quality standards before entering AI pipelines.

Immutable Records and Version Control

Provenance records must be tamper proof. Once created, entries cannot be modified or deleted. This immutability prevents manipulation of the historical record and ensures audit trails remain trustworthy.

Version control tracks every change to data, models, and processes. Teams can reproduce any previous state to investigate issues or validate that specific AI versions behaved correctly under historical conditions.

Clear Ownership and Accountability for Data Assets

Every data asset needs defined owners responsible for its quality, appropriate use, and compliance with regulations. Clear ownership prevents data from becoming “orphaned” with nobody accountable for problems.

Organizations implementing CRM & ERP systems can integrate provenance tracking to ensure enterprise data maintains accountability as it flows through business processes and AI models.

Technical Approaches to Capturing Data Provenance

Organizations capture AI data provenance through automated lineage tracking within data pipelines, using logs, cryptographic hashes, and timestamps for verification while balancing depth of provenance with system performance.

Automated lineage tracking within data pipelines instruments systems to record data movement automatically. Every transformation, join, filter, and aggregation gets logged without requiring manual documentation.

Using Logs, Hashes, and Timestamps for Verification

Technical implementation uses several mechanisms:

- Logs capture detailed events showing what happened to data at each processing step

- Cryptographic hashes create unique fingerprints proving data has not changed

- Timestamps establish exact sequences of events for audit trails

- Digital signatures verify who authorized specific data operations

- Blockchain or distributed ledgers provide tamper proof provenance storage

Balancing Depth of Provenance With System Performance

Comprehensive provenance creates overhead. Logging every detail slows processing and consumes storage. Organizations must decide what level of tracking is necessary without degrading AI system performance.

Practical approaches focus provenance on high risk decisions and critical data while using lighter tracking for routine operations. This balanced strategy maintains trust without making systems unusable.

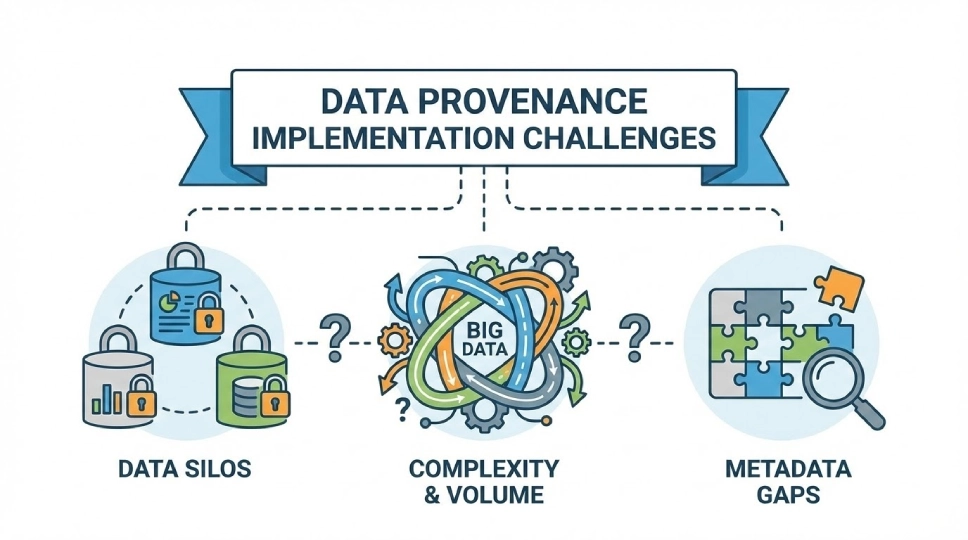

Common Challenges When Implementing Data Provenance

Implementing AI data provenance faces challenges from legacy systems with limited visibility, fragmented data across teams and vendors, and trade offs between transparency and operational complexity.

Legacy systems with limited visibility were not designed to track provenance. Retrofitting old platforms to capture detailed data history requires significant engineering effort and sometimes proves impossible without replacement.

Fragmented Data Across Teams and Vendors

Modern AI depends on data from multiple sources. Internal teams collect some data. External vendors provide other datasets. Cloud platforms host processing. Each fragment follows different provenance standards or none at all.

Unifying provenance across this fragmented landscape requires coordination, standard formats, and agreements about what information each party will document and share.

The Trade Off Between Transparency and Operational Complexity

More detailed provenance means more complexity. Systems become harder to operate. Developers spend time on documentation instead of features. Performance suffers under logging overhead.

Organizations must find the right balance where provenance provides necessary accountability without making AI systems too burdensome to maintain or too slow to meet business requirements.

How Enterprises Use Data Provenance in Real World AI Use Cases

Enterprises apply AI data provenance across customer facing decisions for dispute resolution, risk and fraud models requiring compliance proof, and internal decision support systems under executive scrutiny.

Customer facing AI decisions and dispute resolution benefit directly from provenance. When customers challenge automated decisions, organizations can show exactly what data informed the outcome and prove the process followed fair policies.

Risk, Fraud, and Compliance Driven Models

Financial services use provenance to demonstrate their fraud detection models do not discriminate illegally. Insurance companies prove risk assessments relied on appropriate data. Healthcare organizations show treatment recommendations followed evidence based protocols.

These use cases face intense regulatory scrutiny. Provenance provides the evidence regulators need to approve AI deployment in sensitive domains.

Internal Decision Support Systems Under Executive Scrutiny

Executives making strategic decisions based on AI recommendations want confidence those recommendations rest on solid foundations. Provenance lets them verify that models used current, accurate data and appropriate analytical methods.

This transparency enables leaders to trust AI insights enough to act on them, accelerating data driven decision making throughout the organization.

Measuring the Value of Data Provenance Beyond Compliance

Data provenance value extends beyond compliance by reducing time to resolve AI related incidents, improving model quality through better data understanding, and building long term confidence with users and business partners.

Reducing time to resolve AI related incidents happens because provenance eliminates investigation delays. When problems occur, teams immediately identify which data or processing step caused the issue instead of spending weeks tracing through systems manually.

Improving Model Quality Through Better Data Understanding

Provenance helps data scientists understand training data characteristics more deeply. They discover data quality issues earlier, identify patterns that explain model behavior, and make better decisions about data selection and processing.

This improved understanding translates directly to higher quality models that perform more reliably in production environments.

Building Long Term Confidence With Users and Partners

Organizations known for transparent, well documented AI earn customer trust more easily. Partners feel confident integrating with systems that can prove their reliability. Employees adopt AI tools when they understand and can verify how decisions are made.

This confidence enables faster AI adoption and creates competitive advantage for organizations that invest in provenance early.

How to Start Building Data Provenance Into Your AI Systems

Start building AI data provenance by identifying high risk models requiring it first, defining what traceability level is truly required, and embedding provenance into data and AI workflows early rather than retrofitting later.

Identifying high risk models that need provenance first lets organizations focus limited resources where they matter most. Models making decisions about people, significant financial transactions, or safety critical operations get priority.

Defining What Level of Traceability Is Truly Required

Not every AI decision needs the same provenance depth. Low stakes recommendations might need basic logging. High stakes decisions require comprehensive tracking. Medium risk applications fall somewhere between.

Clear requirements prevent over engineering provenance for simple use cases while ensuring critical applications get sufficient documentation to prove trustworthiness.

Embedding Provenance Into Workflows Early

Building provenance into AI development processes from the beginning costs less and works better than adding it to existing systems later. Design data pipelines with tracking built in. Configure model training to log decisions automatically. Deploy inference systems that capture provenance by default.

Early integration makes provenance a natural part of how teams work instead of an extra burden imposed after the fact.

Why Data Provenance Will Define the Next Phase of Trustworthy AI

AI data provenance is shifting from optional feature to enterprise standard as organizations realize provenance enables explainability at scale and positions AI systems to earn trust rather than just output results.

The transition from optional to standard is accelerating. Regulations increasingly require it. Customers demand it. Business leaders expect it. Organizations that treated provenance as nice to have are discovering it is actually essential.

How Provenance Enables Explainability at Scale

Explaining individual AI decisions becomes manageable with strong provenance. Teams can quickly generate explanations by following the documented data trail instead of manually reconstructing each decision.

This scalability lets organizations deploy AI more broadly while maintaining accountability for every decision the system makes.

Positioning AI Systems to Earn Trust, Not Just Output Results

Future competitive advantage will come from AI systems people actually trust and adopt. Provenance is how organizations demonstrate that trust is deserved.

By investing in provenance now, businesses position themselves as leaders in responsible AI while competitors struggle with opacity, unexplainable decisions, and stakeholder skepticism that limits their AI ambitions.

Key Takeaways for Implementing AI Data Provenance

AI data provenance is the comprehensive documentation enabling organizations to prove their AI decisions are trustworthy by tracking data from origin through every transformation to final outcomes.

Trust in AI has become prerequisite for adoption as regulators, customers, and business leaders demand explainable, defensible decisions instead of accepting black box outputs.

Provenance differs from basic lineage and metadata by combining data flow tracking with accountability, creating complete records of what happened, why, and who was responsible at each step.

Effective frameworks require source validation, immutable records, version control, and clear ownership while balancing comprehensive tracking against system performance requirements.

Organizations start successfully by identifying high risk models first, defining appropriate traceability levels for different use cases, and embedding provenance into development workflows from the beginning rather than retrofitting existing systems.

Contact Webvillee to explore how data provenance frameworks can help your organization build trustworthy, defensible AI systems that earn stakeholder confidence and meet emerging regulatory requirements.